This article was originally published on October 29, 2024 and updated in August 2025 to include the latest AI adoption statistics, insights on quality assurance, and key details on the EU AI Act compliance timeline.

Artificial Intelligence (AI) is no longer a futuristic concept—it’s here, and it’s transforming how we work, live, and innovate. In 2025, AI adoption is accelerating at a pace few could have imagined even two years ago. Generative AI is writing code, assisting doctors with diagnoses, powering financial decision-making, and automating customer interactions across the globe.

But with opportunity comes risk. AI is powerful, yet unpredictable. From high-profile cases of AI hallucinations to compliance challenges as the EU AI Act’s obligations take effect, organizations are learning a hard truth: without robust Quality Assurance (QA), AI can become a liability instead of an advantage.

That’s why QA has moved from a supporting function to a strategic imperative in the AI era.

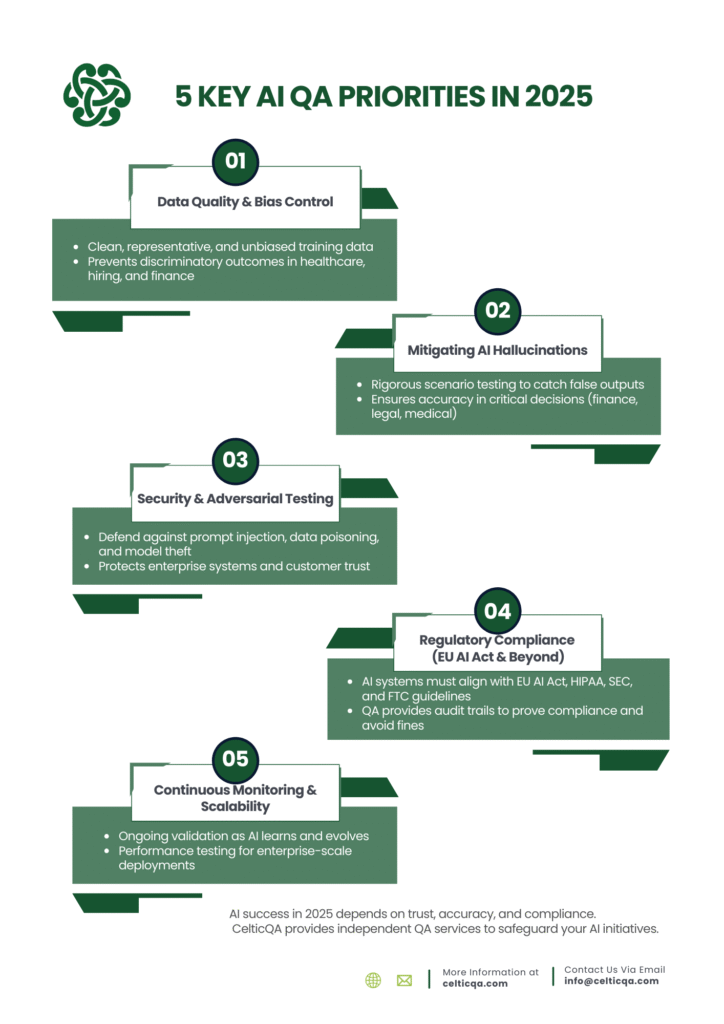

Why QA is More Critical Than Ever in 2025

The growth of AI across industries has been extraordinary. According to recent McKinsey data, reported by the Wall Street Journal, 78% of companies were already using AI in at least one function in 2024—up from 55% the year prior. Adoption is surging across healthcare, finance, and enterprise IT, with no signs of slowing down.

However, this rapid growth has revealed serious risks:

- AI hallucinations: Generative AI tools producing false or misleading outputs.

- Bias in decision-making: AI trained on skewed datasets reinforcing existing inequalities.

- Security vulnerabilities: Prompt injection, data poisoning, and adversarial attacks targeting AI systems.

- Compliance fines: Organizations facing penalties for failing to meet EU AI Act or HIPAA requirements.

The cost of neglecting QA is steep: reputational damage, regulatory penalties, and even legal consequences. In 2025, enterprises can no longer treat QA as optional—it’s the foundation of trustworthy AI.

Key QA Challenges in AI Systems

Building reliable AI systems is different from traditional software. Here’s why QA faces unique challenges in 2025:

Data Quality & Bias

AI is only as good as the data it learns from. Poor, unbalanced, or biased data can lead to discriminatory or inaccurate results, such as flawed hiring tools or misdiagnoses in healthcare systems. QA checks that datasets are representative, free of known biases, and properly structured.
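As an illustration of one such check, the sketch below computes each group’s selection rate from a screening model’s decisions. It flags any group whose rate is below four-fifths of the highest group’s rate, which is the “four-fifths rule” heuristic from U.S. employment-selection guidance. The `(group, selected)` record format, the function names, and the sample data are hypothetical. A ratio above 0.8 is a screening signal, not proof that a model is fair.

```python
from collections import defaultdict

def selection_rates(records):
    """Fraction of candidates selected in each group.

    `records` is an iterable of (group, selected) pairs, where `selected`
    is True when the model recommended the candidate (hypothetical format).
    """
    totals = defaultdict(int)
    chosen = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            chosen[group] += 1
    return {g: chosen[g] / totals[g] for g in totals}

def adverse_impact(records, threshold=0.8):
    """Groups whose rate is below `threshold` times the highest group's rate.

    threshold=0.8 corresponds to the four-fifths rule of thumb.
    Returns {group: impact_ratio} for flagged groups only.
    """
    rates = selection_rates(records)
    best = max(rates.values())
    if best == 0:
        return {}  # nobody was selected, so the ratio is undefined
    return {g: r / best for g, r in rates.items() if r / best < threshold}

# Hypothetical audit sample: 50 candidates per group.
sample = ([("A", True)] * 30 + [("A", False)] * 20
          + [("B", True)] * 18 + [("B", False)] * 32)
print(selection_rates(sample))  # {'A': 0.6, 'B': 0.36}
print(adverse_impact(sample))   # flags B: 0.36 / 0.6 is about 0.6, below 0.8
```

In practice, a check like this would run on held-out audit data for each protected attribute an organization tracks, alongside other fairness metrics.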

Model Unpredictability & Hallucinations

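Unlike traditional software, AI models are probabilistic. The same input can produce different outputs, and behavior can shift when a model is retrained or when real-world data drifts away from what it was trained on. Generative models are also prone to “hallucinations”, which are outputs that sound convincing but are wrong. QA uses scenario-based and adversarial testing to catch these issues before they cause harm.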
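Scenario-based testing can be sketched as a regression suite. Each scenario pairs a prompt with the facts a correct answer must contain and the known-false claims it must not contain. In the minimal Python sketch below, `stub_model` is a hypothetical stand-in for a real model call. Plain substring matching is a deliberately crude placeholder for the semantic or human grading that a real evaluation would use.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

@dataclass
class Scenario:
    prompt: str
    must_include: List[str]                                    # facts a correct answer needs
    must_not_include: List[str] = field(default_factory=list)  # known-false claims

def run_scenarios(model: Callable[[str], str],
                  scenarios: List[Scenario]) -> List[Tuple[str, str]]:
    """Return (prompt, reason) pairs for every check the model fails."""
    failures = []
    for s in scenarios:
        answer = model(s.prompt).lower()
        for fact in s.must_include:
            if fact.lower() not in answer:
                failures.append((s.prompt, f"missing: {fact}"))
        for claim in s.must_not_include:
            if claim.lower() in answer:
                failures.append((s.prompt, f"contains false claim: {claim}"))
    return failures

# Hypothetical stand-in for a real model call, with one wrong answer.
CANNED = {
    "When did the EU AI Act enter into force?": "It entered into force in August 2024.",
    "Which U.S. agency oversees AI medical devices?": "The SEC oversees them.",
}

def stub_model(prompt: str) -> str:
    return CANNED.get(prompt, "")

scenarios = [
    Scenario("When did the EU AI Act enter into force?", ["August 2024"]),
    Scenario("Which U.S. agency oversees AI medical devices?", ["FDA"], ["SEC"]),
]
for prompt, reason in run_scenarios(stub_model, scenarios):
    print(f"FAIL {prompt!r}: {reason}")
```

Running a suite like this on every model or prompt change turns hallucination checks into repeatable tests instead of one-off spot checks.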

Security & Adversarial Risks

AI introduces new attack surfaces. Threat actors can manipulate inputs to deceive AI models (adversarial attacks) or corrupt datasets through data poisoning. QA teams conduct security-focused testing to safeguard AI against these evolving threats.
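To make “adversarial attack” concrete, here is a minimal sketch of the fast gradient sign method (FGSM) against a toy logistic-regression classifier. Each input feature is nudged by a small amount `eps` in the direction that increases the model’s loss, and the check reports whether the predicted label survives. The weights, input, and `eps` values are made up for illustration. Testing a real model would normally rely on a framework with automatic differentiation instead of the hand-derived gradient used here.

```python
import math

def predict_proba(w, b, x):
    """P(class 1) under a logistic-regression model with weights w, bias b."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(w, b, x, y, eps):
    """Fast gradient sign method for logistic regression.

    With binary cross-entropy loss, the gradient with respect to the input
    is (p - y) * w, so each feature moves by eps in the sign of that term.
    """
    p = predict_proba(w, b, x)
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]

def is_robust(w, b, x, y, eps):
    """True if the predicted label survives an FGSM perturbation of size eps."""
    before = predict_proba(w, b, x) >= 0.5
    after = predict_proba(w, b, fgsm(w, b, x, y, eps)) >= 0.5
    return before == after

# Toy model and input (made-up values); the true label y is 1.
w, b = [2.0, -1.0], 0.0
x, y = [0.3, 0.2], 1
print(round(predict_proba(w, b, x), 3))  # 0.599, classified as 1
print(is_robust(w, b, x, y, eps=0.05))   # True
print(is_robust(w, b, x, y, eps=0.2))    # False: the label flips to 0
```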

Compliance & Ethics

AI is now under regulatory scrutiny. The EU AI Act entered into force in August 2024, with major obligations beginning to phase in throughout 2025 and 2026. In the U.S., sectors like healthcare, finance, and advertising face their own compliance pressures. QA helps organizations meet these standards and document that they do, protecting them from fines and reputational fallout.

The QA Approach to AI in 2025

So how do organizations rise to these challenges? A modern QA strategy for AI requires more than traditional testing. It must evolve to fit the unique dynamics of AI systems.

- Data Quality Testing: Verifying datasets are accurate, balanced, and free from hidden biases.

- Algorithm Validation: Ensuring algorithms behave as expected across conditions and remain explainable.

- Continuous Testing & Monitoring: Because production data drifts and models get retrained, AI performance can change after release. QA must therefore be ongoing, with real-time checks for drift and degradation.

- Ethical & Bias Auditing: Regular audits to assess fairness, transparency, and alignment with ethical standards.

- Scalability & Performance Testing: Confirming AI systems can handle enterprise-scale workloads without failures.
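Drift checks for continuous monitoring are often based on the Population Stability Index (PSI). PSI compares how a feature or model score is distributed in production against a baseline. The sketch below bins both samples and applies a commonly cited rule of thumb: below 0.1 is stable, 0.1 to 0.25 is a moderate shift, and above 0.25 is a significant shift. Those thresholds are an industry heuristic, not a regulatory standard. The cut points and sample data are hypothetical.

```python
import bisect
import math

def bin_proportions(values, cuts, floor=1e-6):
    """Share of values in each bin; k ascending cut points give k+1 bins.

    Empty bins get a small `floor` so the log term below stays finite.
    """
    counts = [0] * (len(cuts) + 1)
    for v in values:
        counts[bisect.bisect_right(cuts, v)] += 1
    return [max(c / len(values), floor) for c in counts]

def psi(baseline, live, cuts):
    """Population Stability Index: sum of (a - e) * ln(a / e) over bins."""
    e = bin_proportions(baseline, cuts)
    a = bin_proportions(live, cuts)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))

def drift_level(value):
    """Commonly cited PSI rule of thumb (a heuristic, not a standard)."""
    if value < 0.1:
        return "stable"
    if value < 0.25:
        return "moderate shift"
    return "significant shift"

# Hypothetical model scores in [0, 1], binned into quarters.
cuts = [0.25, 0.5, 0.75]
baseline = [0.1, 0.3, 0.6, 0.9] * 25  # 25% of scores per bin
live = [0.1] * 10 + [0.3] * 10 + [0.6] * 30 + [0.9] * 50
score = psi(baseline, live, cuts)
print(round(score, 3), drift_level(score))  # 0.457 significant shift
```

In a monitoring pipeline, a score past the chosen threshold would typically trigger an alert for investigation or retraining, not an automatic action.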

Together, these practices build AI systems that are not just innovative but also reliable, safe, and trusted.

2025 Regulatory & Compliance Considerations

This year marks a turning point in AI governance.

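- EU AI Act (Regulation (EU) 2024/1689): Adopted in June 2024 and in force since August 1, 2024. Prohibitions on unacceptable-risk AI practices apply from February 2, 2025. Obligations for general-purpose AI models apply from August 2, 2025, and most high-risk AI obligations apply from August 2, 2026.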

- Healthcare AI: HIPAA requires safeguards for protected health information. The FDA oversees the safety and effectiveness of AI-enabled medical devices, including diagnostic software.

- Finance AI: SEC rules and enforcement actions hold firms accountable for algorithmic trading and AI-driven decision-making.

- Consumer Protection: The FTC is cracking down on misleading AI use in advertising and customer interactions.

For organizations, QA is no longer just about functionality—it’s about proving compliance. QA teams provide the documentation, testing evidence, and audit trails needed to demonstrate that AI systems meet these legal and ethical requirements.

How CelticQA Ensures AI Success

At CelticQA, we know that AI success isn’t just about speed—it’s about trust. Our independent QA services are designed to help enterprises navigate the complexities of AI adoption in 2025.

Here’s how we help:

- Independent oversight through our Quality Management Office (QMO) model.

- AI-focused testing methodologies including automated testing, adversarial analysis, and continuous monitoring.

- Tailored QA strategies for regulated industries like healthcare, finance, and enterprise IT.

- Faster ROI by reducing defects early and ensuring compliance before deployment.

By partnering with CelticQA, organizations gain more than QA—they gain confidence that their AI systems will perform reliably, ethically, and at scale.

Conclusion: QA as a Strategic Imperative

AI will continue to reshape industries in 2025 and beyond. But without quality assurance, even the most advanced AI systems risk failure, fines, and loss of trust.

The organizations that thrive will be those that embed QA at the heart of their AI strategy.

At CelticQA, we help businesses build AI systems that are accurate, compliant, and resilient—turning innovation into a sustainable advantage.

Ready to safeguard your AI initiatives? Contact CelticQA today to design a QA strategy built for the future.